August 22, 2025 by Yotta Labs

Scaling RLHF Training Without the Complexity

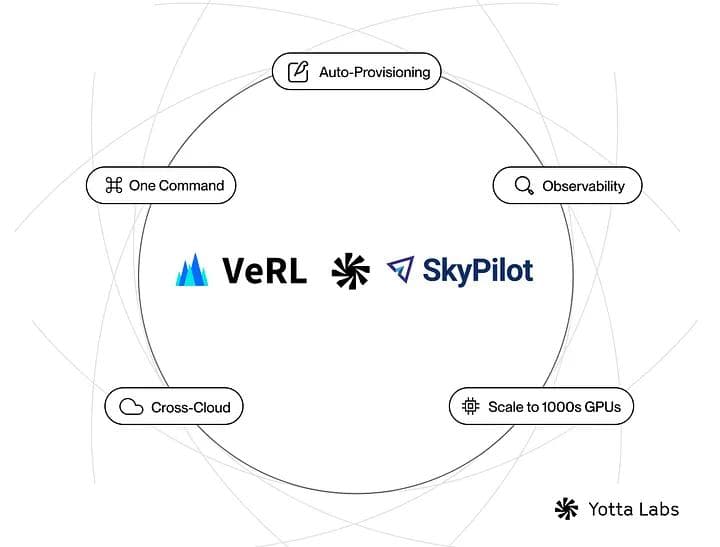

Yotta Labs contributed a new SkyPilot integration to VeRL (Volcano Engine Reinforcement Learning), the open-source RL library for LLMs. Now, with just one YAML file and one command, you can launch and scale multi-node RLHF training jobs across AWS, GCP, Azure, Kubernetes, or Yotta Labs infrastructure.

Yotta Labs Enables One Command to Train Them All: Scaling RLHF with VeRL, Ray, and SkyPilot

Training large language models (LLMs) with reinforcement learning from human feedback (RLHF) is powerful — but painfully complex to scale. You need Ray clusters, cloud provisioning, multi-node orchestration, IP wrangling, dependency syncing… and that’s before the training even starts.

We’re fixing that.

Today, VeRL (Volcano Engine Reinforcement Learning), an open-source RL library for LLMs, just got a massive upgrade. Thanks to a new SkyPilot integration contributed by Yotta Labs, VeRL users can now launch and scale multi-node RLHF training with a single command.

One YAML. One Command. Fully Distributed Training.

VeRL now supports instant Ray cluster provisioning with SkyPilot. Just define your cluster in YAML, then launch:

sky launch -c VeRL VeRL-cluster.yml

That’s it. Ray head and worker nodes are spun up automatically, the training environment is deployed, and your job starts running, with the Ray Dashboard available for monitoring from the start.
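For a sense of what that YAML contains, here is a minimal sketch of a two-node cluster file. It is illustrative, not the config that ships with VeRL: the task name, the `pip install verl` setup step, and the placeholder training command are assumptions. `SKYPILOT_NODE_IPS` and `SKYPILOT_NODE_RANK` are environment variables that SkyPilot sets on each node.

```yaml
# VeRL-cluster.yml: illustrative sketch, not the config shipped with VeRL
name: verl-rlhf            # hypothetical task name

resources:
  accelerators: H100:8     # GPUs per node
  ports: 8265              # expose the Ray Dashboard port

num_nodes: 2               # total nodes; rank 0 becomes the Ray head

setup: |
  # Placeholder install step; assumes the package is installable from PyPI as `verl`.
  pip install verl

run: |
  # SkyPilot lists one node IP per line; the first line is the rank-0 node.
  head_ip=$(echo "$SKYPILOT_NODE_IPS" | head -n1)

  if [ "$SKYPILOT_NODE_RANK" = "0" ]; then
    # Start the Ray head and make the dashboard reachable on port 8265.
    ray start --head --port=6379 --dashboard-host=0.0.0.0 --dashboard-port=8265
    sleep 5
    # Placeholder: replace with your actual VeRL training entry point and arguments.
    echo "launch training here"
  else
    # Give the head a moment to come up, then join it as a worker.
    sleep 5
    ray start --address="$head_ip:6379"
    sleep 5
  fi
```

Rank 0 starts the Ray head, the remaining nodes join it, and port 8265 is where the Ray Dashboard listens. With the port exposed this way, `sky status --endpoint 8265 VeRL` should print the dashboard address.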

No cloud console. No SSH. No headaches.

Why This Matters

✅ Multi-node training, zero setup

Launch distributed training on 2 or 200+ nodes — across AWS, GCP, Azure, Kubernetes, or Yotta Labs infrastructure — with no manual provisioning.
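As a rough sketch of how the choice of provider can be expressed, SkyPilot's task YAML accepts a list of candidate locations under `resources`. The snippet below assumes the `any_of` form with `cloud:` keys (newer SkyPilot releases also document an `infra:` key). Yotta Labs infrastructure would be one more entry, omitted here because its identifier is not given above.

```yaml
# Assumed form: let SkyPilot choose among several candidate providers.
resources:
  accelerators: H100:8     # same node shape wherever the job lands
  any_of:
    - cloud: aws
    - cloud: gcp
    - cloud: azure
    - cloud: kubernetes

num_nodes: 2
```

The rest of the file (setup and run steps) stays the same regardless of which candidate is selected, which is what keeps the definition portable.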

⚙️ Ray Dashboard included

Get real-time training visibility the moment your job starts. Resource usage, task status, and more — built in.

🚀 Scales to 8192+ GPUs

Change one line in your YAML to scale from a single H100 to thousands of H100s. No custom infra logic needed.
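To make the arithmetic concrete, here is a hedged sketch of the two fields that determine cluster size. It assumes 8 H100s per node, which the post does not specify: total GPUs are simply `num_nodes` times the per-node accelerator count.

```yaml
resources:
  accelerators: H100:8     # 8 GPUs per node (assumed node shape)

# Total GPUs = num_nodes x GPUs per node.
#   num_nodes: 1     ->    1 x 8 =    8 GPUs
#   num_nodes: 1024  -> 1024 x 8 = 8192 GPUs
num_nodes: 1024
```

Starting from a single GPU would mean `accelerators: H100:1` with `num_nodes: 1`. From there, the node count is the line that grows.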

🔁 Reproducible and portable

Cluster definition lives in one declarative file. Share it, re-run it, migrate it — no rewriting or cloud-specific configs.

Built for LLM Builders

This integration isn’t academic. It’s built for real-world teams doing real work with LLMs and RLHF.

Whether you’re:

- Training a reward model on preference data

- Running PPO or DPO across multiple nodes

- Scaling fine-tuning with complex data pipelines

You can now go from zero to a fully operational cluster with a single command, using open-source tools that work across any cloud.

Get Started

- 📘 VeRL GitHub Repository

- ⚡ SkyPilot YAML Config for VeRL

- 🧠 Yotta Labs: AI Infrastructure Without the Complexity

What’s Next

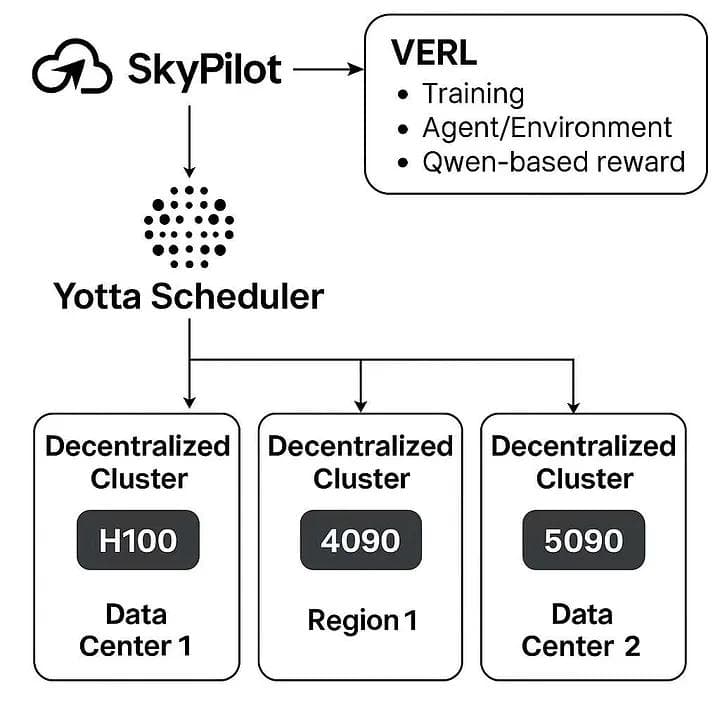

Large-scale training is often constrained by the limits of a single data center, whether through capacity shortfalls, cost inefficiencies, or geographic isolation from better-suited resources. By orchestrating workloads across multiple, geographically dispersed clusters, Yotta Labs aims to unlock access to the most cost-effective and performant compute nodes available worldwide, dynamically routing each workload to where it can run fastest and cheapest.

Yotta Labs is preparing to release a decentralized training scheduler that extends beyond traditional cloud-cluster orchestration. While today’s VeRL + SkyPilot integration makes it simple to spin up distributed RLHF training across providers, the next step is breaking data center silos and allowing training workloads to run across heterogeneous, geographically distributed clusters.