Unlock the Power of LLMs with Your Own Data on Yotta's Serverless Platform

Achieve higher accuracy on your domain tasks with fine-tuning

Product Highlights

Serverless

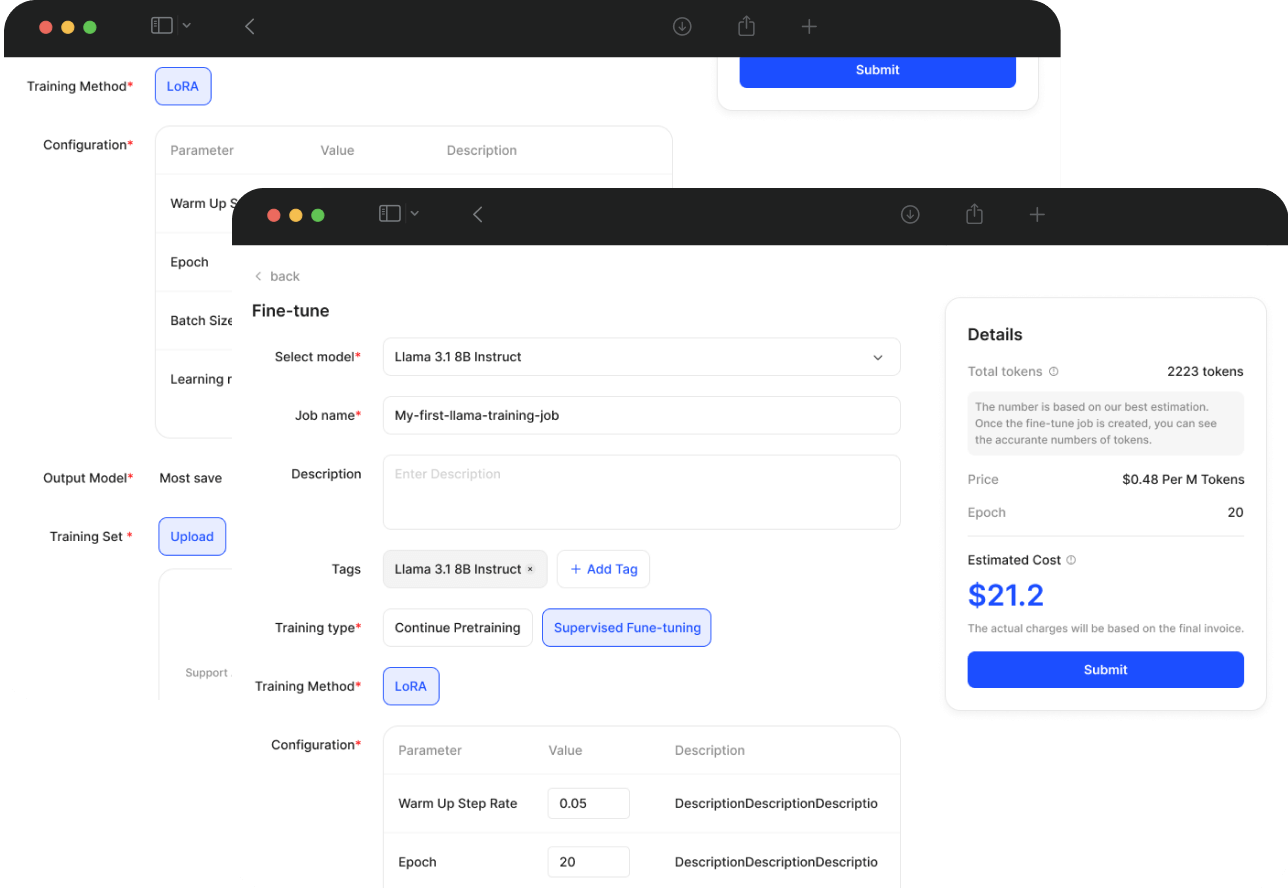

No need to manage hardware or choose GPU types and quantities. You are charged based on the number of tokens in your datasets and the number of training epochs.
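As a rough illustration of token-based billing, the sketch below assumes that every dataset token is processed once per epoch, so the billed volume is tokens multiplied by epochs. Both that formula and the `price_per_million_tokens` rate are assumptions for illustration only, not published pricing.

```python
def estimate_billed_tokens(dataset_tokens: int, epochs: int) -> int:
    """Assumed billing unit: each dataset token counts once per training epoch."""
    if dataset_tokens < 0 or epochs < 1:
        raise ValueError("dataset_tokens must be >= 0 and epochs must be >= 1")
    return dataset_tokens * epochs


def estimate_cost(dataset_tokens: int, epochs: int,
                  price_per_million_tokens: float) -> float:
    """Estimate cost from a hypothetical per-million-token rate."""
    billed = estimate_billed_tokens(dataset_tokens, epochs)
    return billed / 1_000_000 * price_per_million_tokens


if __name__ == "__main__":
    # 2M-token dataset, 3 epochs, placeholder rate of 0.5 per million tokens.
    print(estimate_billed_tokens(2_000_000, 3))
    print(estimate_cost(2_000_000, 3, 0.5))
```

Under these assumptions, doubling the number of epochs doubles the estimate, which is why the dataset size and the epoch count are the two figures to check before launching a job.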

Elasticity

Schedule your fine-tuning jobs across a decentralized network of GPUs. Scale effortlessly as needed, with automatic resource allocation that matches the demands of your workload.

Hassle-free

The platform has built-in fault tolerance mechanisms and seamlessly handles hardware unavailability and failures, ensuring uninterrupted model fine-tuning without manual intervention.

How It Works

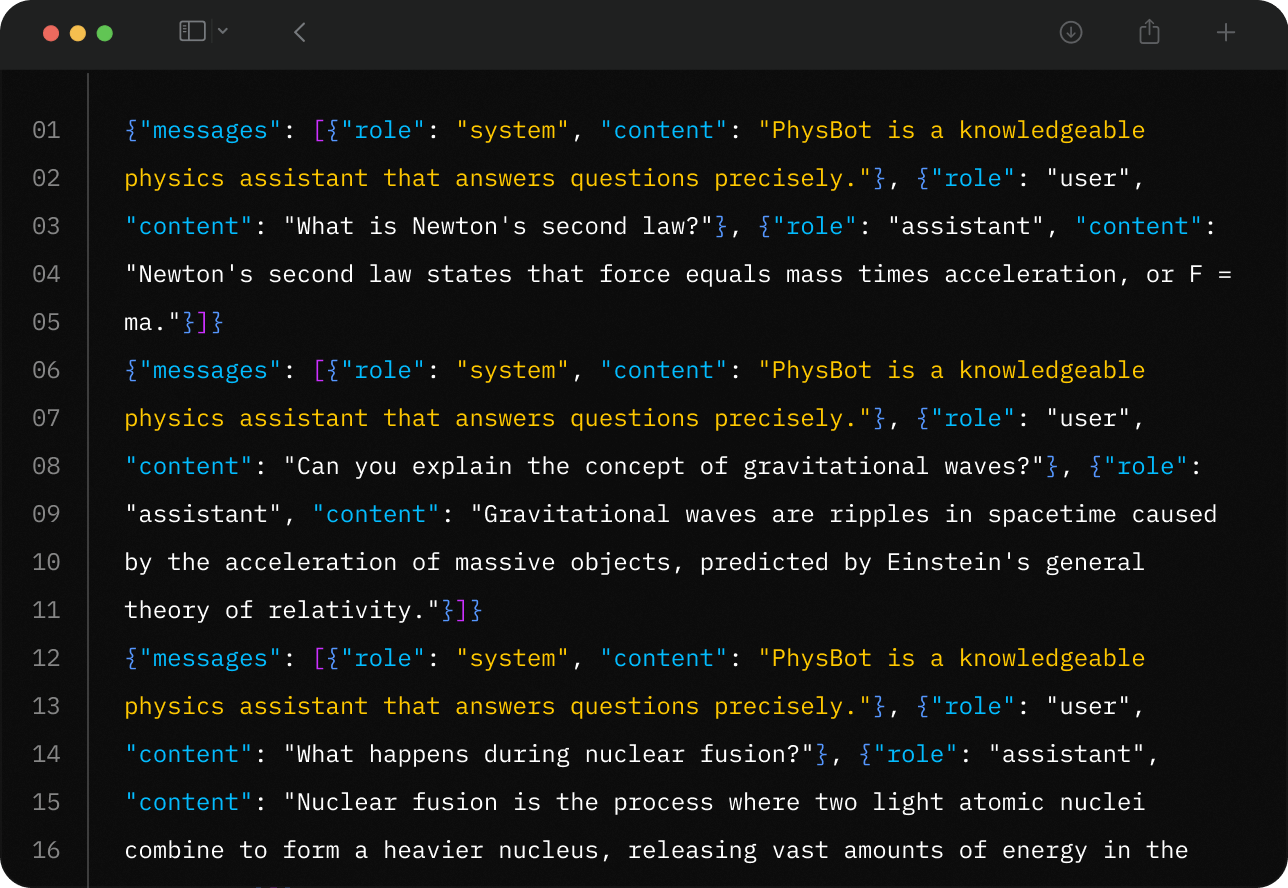

Begin by preparing your dataset: follow the prompt template of the model to organize your data into a .jsonl file.
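A minimal sketch of this step is shown below. The prompt template and the `prompt`/`completion` field names are hypothetical placeholders; the actual template and schema depend on the model you fine-tune.

```python
import json

# Hypothetical prompt template; replace it with the one for your model.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def to_record(instruction: str, response: str) -> dict:
    """Wrap one raw example in the (assumed) prompt/completion schema."""
    return {
        "prompt": PROMPT_TEMPLATE.format(instruction=instruction),
        "completion": response,
    }


def to_jsonl(examples) -> str:
    """Serialize (instruction, response) pairs as JSON Lines: one object per line."""
    lines = [
        json.dumps(to_record(instruction, response), ensure_ascii=False)
        for instruction, response in examples
    ]
    return "\n".join(lines) + "\n" if lines else ""


if __name__ == "__main__":
    examples = [
        ("Summarize: the meeting moved to Friday.", "Meeting is now on Friday."),
        ("Translate to French: good morning", "bonjour"),
    ]
    print(to_jsonl(examples), end="")
```

The resulting string can be written to a file with a `.jsonl` extension. Because `json.dumps` escapes newlines inside strings, each record stays on a single line even when the template spans several.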

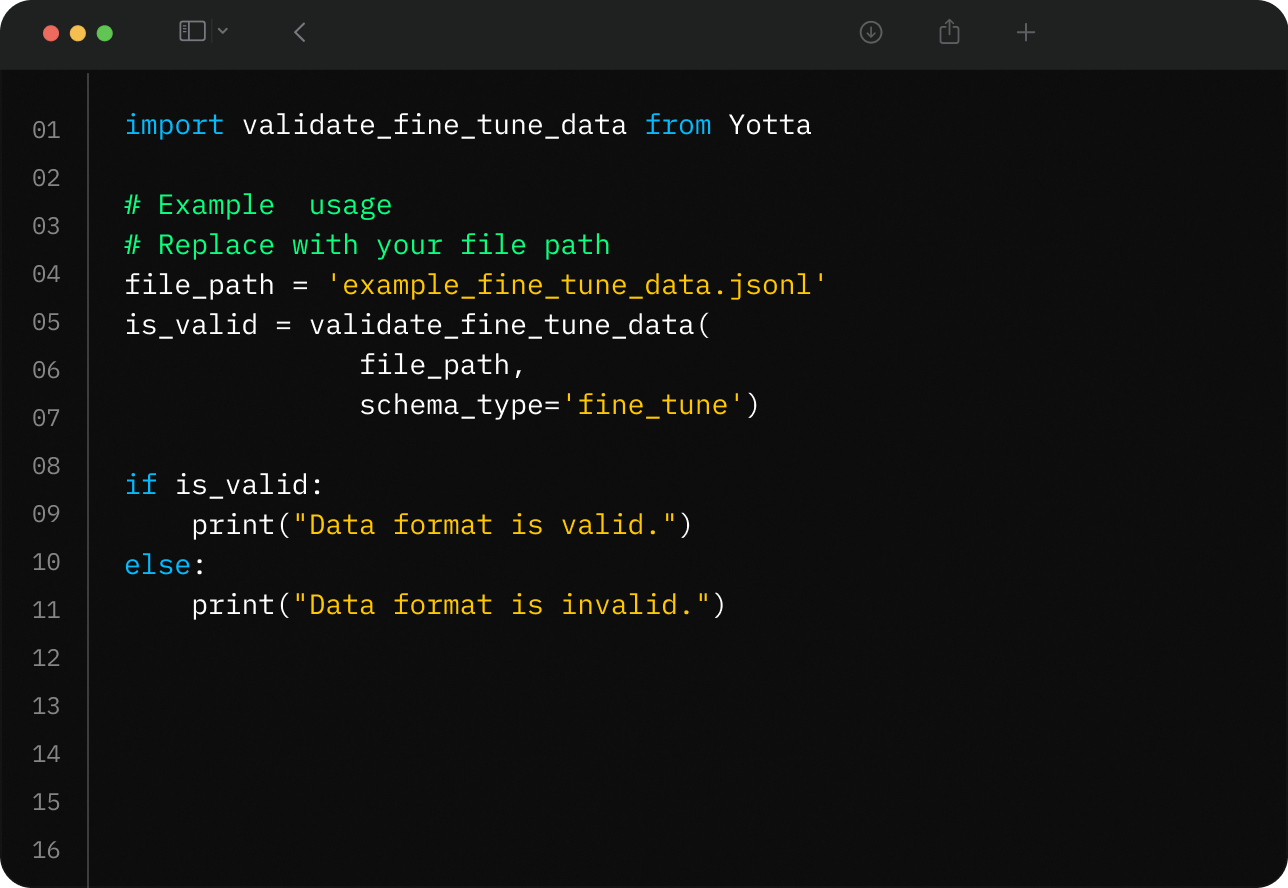

Validate the format of your dataset and upload it to the platform.
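Before uploading, a local check along the following lines can catch malformed records early. This is a sketch under assumptions: the required `prompt` and `completion` fields are hypothetical, and the platform's own validator may apply different rules.

```python
import json

# Assumed schema; adjust to the fields your model's template requires.
REQUIRED_KEYS = ("prompt", "completion")


def validate_jsonl(text: str) -> list[str]:
    """Return a list of human-readable problems; an empty list means the text passed."""
    errors = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            errors.append(f"line {lineno}: empty line")
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            errors.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            errors.append(f"line {lineno}: expected a JSON object")
            continue
        for key in REQUIRED_KEYS:
            value = record.get(key)
            if not isinstance(value, str) or not value:
                errors.append(f"line {lineno}: missing or empty string field '{key}'")
    return errors


if __name__ == "__main__":
    sample = '{"prompt": "Hi", "completion": "Hello"}\n{"prompt": "Only a prompt"}\n'
    for problem in validate_jsonl(sample):
        print(problem)
```

Reporting every problem with its line number, rather than stopping at the first one, makes it easier to fix a large dataset in a single pass.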

Start fine-tuning with a single command or through an intuitive UI, with a comprehensive list of customizable hyperparameters.

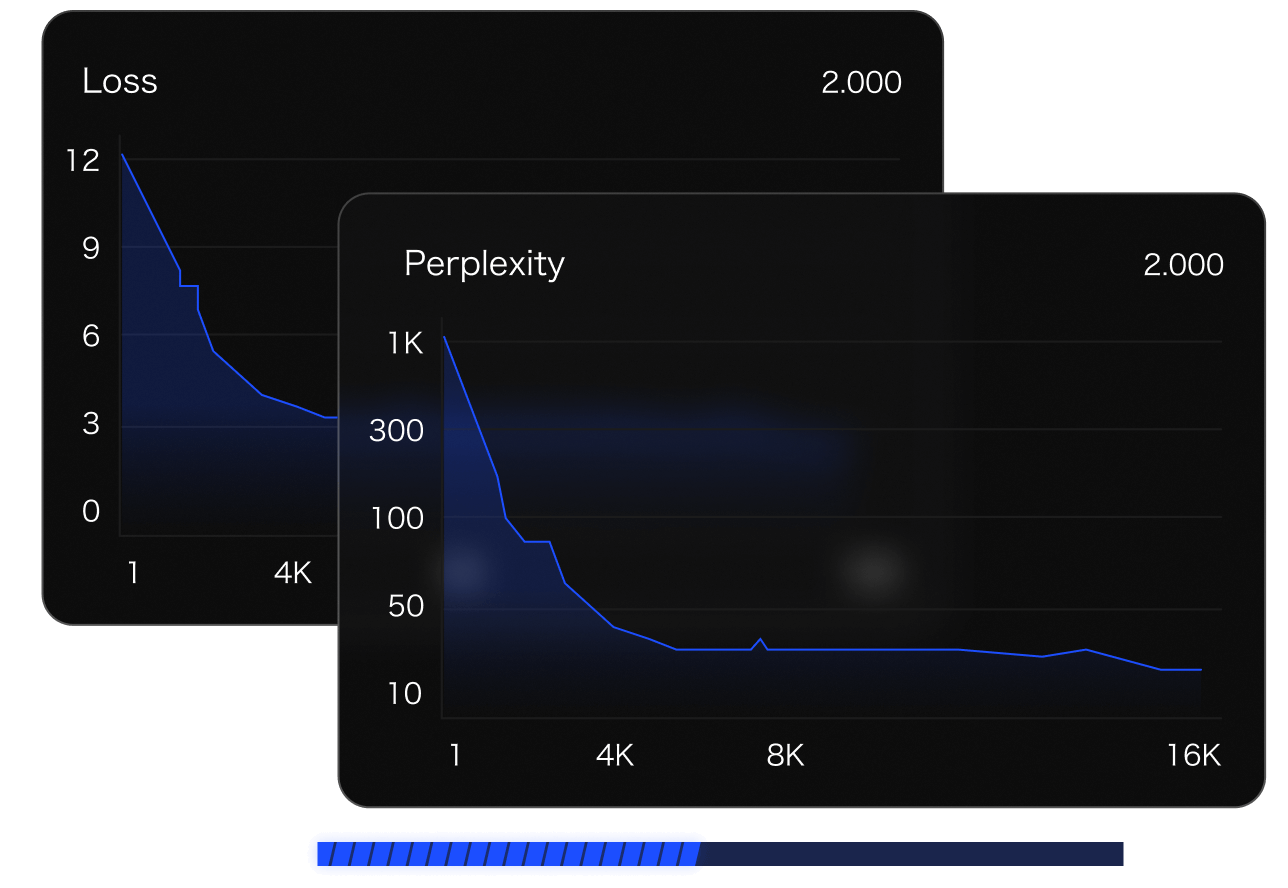

Monitor progress via Weights & Biases, pause and deploy checkpoints at any time, and test with our AI explorer.